STEP 7: More tools, more potential bugs

Welcome to the 7th post of the 6M Challenge, Bug Bounty edition, where you are going to learn about more tools that can help you find more potential bugs in your bug bounty career. I hope that you have finished the tasks given in the previous post, i.e.:

- Download and install Burp Suite if you are on Windows.

- Configure Burp Suite for your browser.

- Test the configuration.

Finding potential bugs by using tools

Now it’s time for information gathering, scanning, and reconnaissance. We’ll be using a few tools to scan web servers and identify any potential bugs at the surface level. All the information collected at this stage could help us later, during the core exploitation game. Let’s get going quickly, since we have lots of tools to go through:

1. Nmap

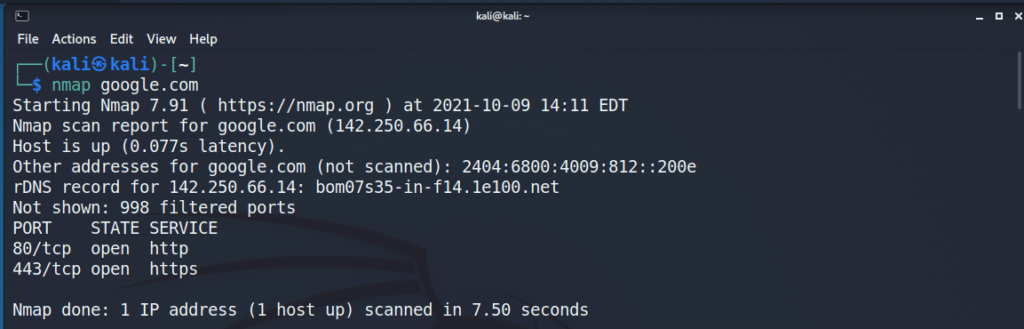

Nmap is a network scanner. We’re going to use it to scan web servers for open ports, the operating system, and the versions of the applications running on the server. Kali has Nmap pre-installed. I’m running a scan on google.com by typing this command into the terminal:

nmap google.com

Here are the results. Everything seems fine: only two ports are open, HTTP (80) and HTTPS (443), which are used to deliver webpages to us.

This is an example of a well-configured server, with respect to ports.
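To get a feel for what “open port” means, here is a minimal Python sketch of a TCP connect check, which is roughly the idea behind Nmap’s connect scan. It is not how Nmap itself is implemented, and the function names `is_port_open` and `open_ports` are made up for this illustration.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection performs the full TCP handshake, like a connect scan.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered, unreachable, or timed out: treat all as "not open".
        return False


def open_ports(host: str, ports, timeout: float = 1.0):
    """Return the subset of ports that accepted a TCP connection."""
    return [port for port in ports if is_port_open(host, port, timeout)]
```

Calling `open_ports("127.0.0.1", [80, 443, 3306])` checks the web ports and the default MySQL port on your own machine. As with Nmap, only point it at hosts you are allowed to test.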

Let’s make it more fun. Here’s an example where the MySQL port was open on one of Uber’s websites, which means that anyone with a valid username and password could have accessed the website’s SQL database.

A hacker found that one more website was hosted on the same IP as Uber’s website, and that website exposed the username and password for the MySQL port of the server. So the hacker got access and reported it to Uber. Here’s the official report: https://hackerone.com/reports/439223

Nmap does lots of other things too, like detecting the versions of applications running on the server and identifying the server’s OS. We can’t cover every command and every kind of scan here, so go read this short, beginner-friendly crash course on Nmap: https://www.edureka.co/blog/nmap-tutorial/
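As a small taste of those other scans, here is a hedged Python sketch that assembles an Nmap command line with version detection (`-sV`), OS detection (`-O`), and a port list (`-p`). Those three flags are real Nmap options, but the helpers `build_nmap_command` and `run_scan` are hypothetical names invented for this example. Note that `-O` normally needs root privileges.

```python
import shlex
import subprocess


def build_nmap_command(target, ports=None, detect_versions=False, detect_os=False):
    """Build an nmap argument list; nothing is executed here."""
    cmd = ["nmap"]
    if detect_versions:
        cmd.append("-sV")  # probe open ports for service and version info
    if detect_os:
        cmd.append("-O")   # OS detection (usually requires root)
    if ports:
        cmd += ["-p", ",".join(str(port) for port in ports)]
    cmd.append(target)
    return cmd


def run_scan(cmd):
    """Run the command and return nmap's text output (nmap must be installed)."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return result.stdout


# Show the command that would be run, without scanning anything.
print(shlex.join(build_nmap_command("example.com", [80, 443], detect_versions=True)))
```

Building the command as a list instead of one big string keeps the target from being interpreted by the shell, which is a sensible habit when the target name comes from somewhere else.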

2. Nikto

Again, pre-installed in Kali. Nikto looks for files and application versions that are already known to be vulnerable. For example, if a particular version of server software is known to be vulnerable but a website still uses it, Nikto will tell you when you scan that website. Likewise, if Nikto finds a file that could leak information from the server, it will let you know.
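The “known outdated version” idea can be sketched in a few lines of Python. This is only a toy illustration, not Nikto’s actual logic or database: it parses a `Server` banner such as `Apache/2.2.8 (Ubuntu)` and compares it against a baseline. The `MINIMUM_VERSIONS` table and both function names are assumptions made up for the example.

```python
import re

# Hypothetical baseline for illustration only; not an authoritative list.
MINIMUM_VERSIONS = {"apache": (2, 4, 0), "nginx": (1, 20, 0)}


def parse_server_banner(banner):
    """Turn 'Apache/2.2.8 (Ubuntu)' into ('apache', (2, 2, 8)), or None."""
    match = re.match(r"\s*([A-Za-z\-]+)/(\d+(?:\.\d+)*)", banner)
    if not match:
        return None
    name = match.group(1).lower()
    version = tuple(int(part) for part in match.group(2).split("."))
    # Pad short versions such as '2.4' to three components before comparing.
    version = (version + (0, 0, 0))[:3]
    return name, version


def is_outdated(banner, minimums=MINIMUM_VERSIONS):
    """Return True if the banner names known software below its baseline."""
    parsed = parse_server_banner(banner)
    if parsed is None:
        return False
    name, version = parsed
    minimum = minimums.get(name)
    return minimum is not None and version < minimum
```

For instance, `is_outdated("Apache/2.2.8 (Ubuntu)")` is true under this made-up baseline, while a banner with no version number is simply skipped.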

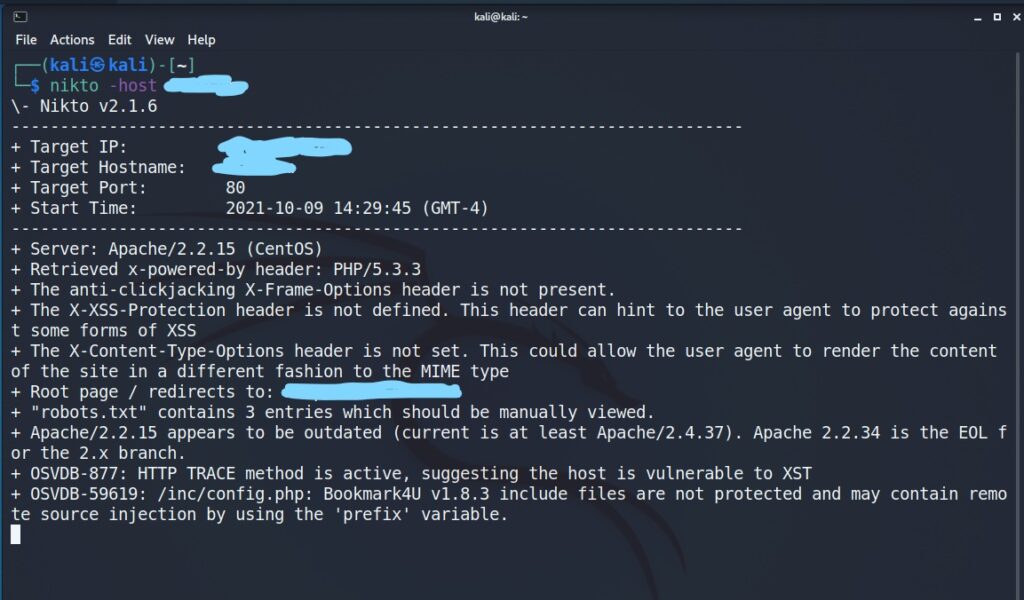

I’m running Nikto on a website whose name I’ll keep hidden, since there seem to be some problems with it. Please note that you must not use Nikto on a website without permission from its admin. Ready? Let’s go.

Type in this command:

nikto -host example.com

The website I scanned threw these results:

What do I learn from this scan?

- The Apache server version is far older than what is considered safe in the industry.

- The HTTP TRACE method is enabled. TRACE echoes back the request exactly as the server received it, which can reveal how firewalls or proxies in front of the website modify requests, and that knowledge could potentially help us slip harmful input past them.

Read this brilliant article for a full explanation of how it’s done: https://www.blackhillsinfosec.com/three-minutes-with-the-http-trace-method/

PS: The HTTP TRACE method was originally meant for debugging: it shows what a server receives when we send it an HTTP request. Since there can be several layers of filtering in between, TRACE helps us see what the server actually receives after all of that filtering.
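If you want to see TRACE in action, here is a minimal sketch using Python’s standard `http.client`. It sends a TRACE request with a marker header and checks whether the marker comes back in the response body. The header name `X-Trace-Probe` and the helper names are assumptions invented for this example, and the check is a rough heuristic, not a replacement for Nikto’s test.

```python
import http.client


def send_trace(host, port=80, path="/", use_tls=False, timeout=5.0):
    """Send one TRACE request and return (status_code, response_body)."""
    if use_tls:
        conn = http.client.HTTPSConnection(host, port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        # The marker header lets us recognise our own request in the echo.
        conn.request("TRACE", path, headers={"X-Trace-Probe": "hello"})
        response = conn.getresponse()
        body = response.read().decode("utf-8", errors="replace")
        return response.status, body
    finally:
        conn.close()


def trace_looks_enabled(status, body):
    """Heuristic: a 200 response that echoes our marker header back."""
    return status == 200 and "x-trace-probe" in body.lower()
```

Comparing the echoed request with the one you sent shows which headers were added, dropped, or changed along the way. Only try this against servers you have permission to test.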

So Nikto helps us gather this kind of information, which can take us closer to the bugs.

3. Burp Suite Spider

Spider “crawls” through the entire website by following each and every link, and gives us a list of the URLs of all the folders and files publicly reachable on the server. The more pages we know of, the more places we have to look for bugs.

How to use Spider to find potential bugs?

I’ll be using Spider on hackthissite.org, and it’s perfectly legal to test on this website. So, follow these steps:
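The core of any crawler is “take a page, pull out its links, keep the ones that belong to the target”. Here is a small Python sketch of that one step using only the standard library. It is not how Burp’s Spider works internally; `LinkCollector` and `extract_in_scope_links` are names made up for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_in_scope_links(page_url, html, scope_host):
    """Return sorted absolute http(s) links that point at scope_host."""
    collector = LinkCollector()
    collector.feed(html)
    found = set()
    for href in collector.hrefs:
        # Resolve relative links and drop '#fragment' parts.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parts = urlparse(absolute)
        if parts.scheme in ("http", "https") and parts.hostname == scope_host:
            found.add(absolute)
    return sorted(found)
```

A full crawler would fetch each returned URL, run the same function on it, and remember what it has already visited. The host check is what keeps it from wandering off to other websites.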

- Turn on Burp Suite and open Firefox, as we learned in the last post.

- Once Burp Suite is open and connected to Firefox, go to hackthissite.org in Firefox.

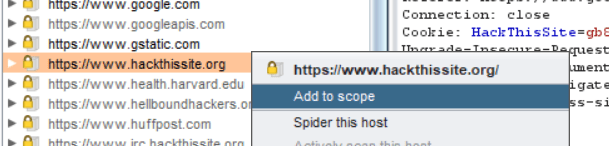

- Once the website is loaded, go to Burp Suite and click on the “Target” tab at the top. On the left-hand side there is a list of all the websites from which Firefox has fetched content and which Burp Suite has noticed.

- Look for ‘hackthissite.org’ in that list. Right-click on hackthissite.org and click on “Add to scope”.

- Click on “Yes” if a prompt appears.

- The thing is, Burp automatically keeps listing directories of every website that is active in the browser. When you click “Yes” on this prompt, it will stop crawling websites that aren’t in scope and crawl only the ones that are, which here is hackthissite.org.

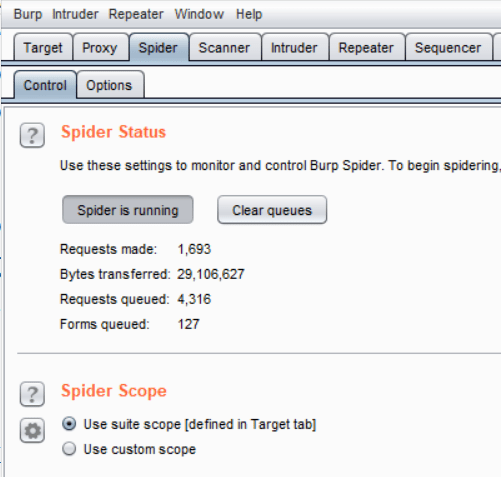

- Next, click on the “Spider” tab at the top. Click on the “Spider is paused” button to turn it on. It might ask you for credentials to submit in forms.

- Just go with “Ignore form” for now. Spider asks because it wants to reach and list as many files and pages as possible, but right now we’re just learning the basic how-to. When you do this on a real website, just enter the email and password of your test account for that website.

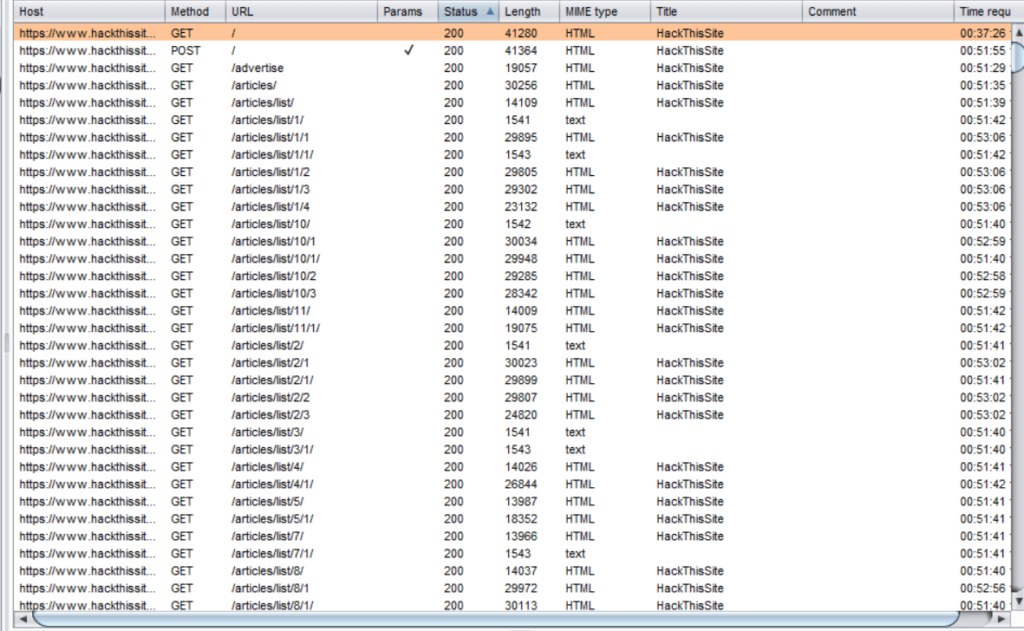

- Note that Spider is a bot, so it might ask for assistance once in a while, but it will do most of the work of listing all the directories and files present. After running for a few seconds, the spider had sent over 1600 requests.

- It has listed lots of pages for me, which look like this:

Most of these pages are articles, but in a serious engagement we often come across a page that should be hidden. For example, an admin login page should not be publicly reachable, and if it is, that’s dangerous. Similarly, there may be a text file containing something sensitive. The possibilities are endless.
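Once the spider has handed you hundreds of URLs, a quick filter helps you decide where to look first. The sketch below flags URLs whose path hints at an admin area or a stray file. The keyword and extension lists are hypothetical examples chosen for illustration, not a vetted wordlist, and `flag_interesting` is a made-up helper name.

```python
from urllib.parse import urlparse

# Hypothetical hints chosen for illustration; tune them for each target.
PATH_HINTS = ("admin", "login", "backup", "config")
EXTENSION_HINTS = (".txt", ".bak", ".sql", ".log", ".zip")


def flag_interesting(urls):
    """Return the URLs whose path contains a hint or ends in a hinted extension."""
    flagged = []
    for url in urls:
        path = urlparse(url).path.lower()
        has_hint = any(hint in path for hint in PATH_HINTS)
        if has_hint or path.endswith(EXTENSION_HINTS):
            flagged.append(url)
    return flagged
```

A match only means “worth a manual look”. Plenty of flagged pages will be harmless, and plenty of sensitive ones will not match any keyword.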

And this Spider is the main reason I asked y’all to install Burp 1.7 instead of Burp 2, last week.

This report is an example of stumbling upon sensitive files that shouldn’t be public. Spider can help you find such files: https://hackerone.com/reports/273726

PS: Avoid using Spider on websites like social media platforms, since they have literally billions of pages. Our teeny-tiny computer and internet connection would take years to go through all of them.

Phew, let’s end this here for today.

Your tasks for this week are:

- Read up on Nmap and try its commands on several websites.

- Try out Nikto.

- Use Burp Suite Spider and see what kind of files you can find on your favorite websites.

Till next week. Bub-bye.